AI is already in schools. Teachers are using it—sometimes officially, sometimes quietly—to brainstorm lessons, adapt texts, write questions, and save time wherever they can.

And yet, beneath that adoption is a tension we kept hearing over and over again: This could help me, but I don’t fully trust it.

That tension is where this story begins. 💭

The AI adoption paradox no one talks about

Educators see the promise of AI. They’re under real pressure to plan faster, differentiate more effectively, and meet the needs of increasingly diverse classrooms. In theory, AI should help.

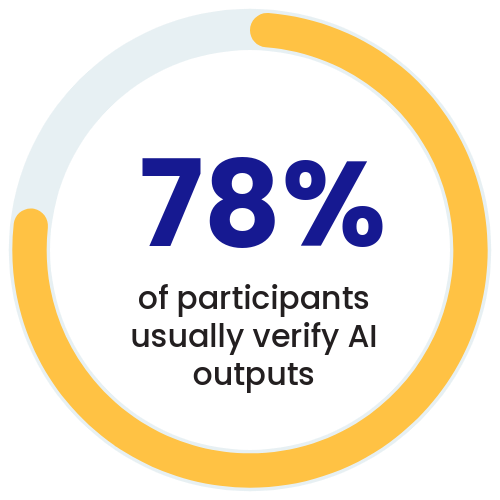

But in practice, many teachers are spending more time verifying outputs, rewriting content, and second-guessing what AI produces, especially when sources are unclear or absent.

In our global beta test of Britannica Studio, nearly 78% of educators told us they routinely verify AI-generated content before using it—not because they don’t understand AI, but because accuracy, rigor, and trust still matter deeply in classrooms.

🔗 The Trust Blueprint White Paper

That disconnect between AI’s promise and its day-to-day reality is what we wanted to understand better.

What happens when AI is built for teaching, not just generating?

Rather than asking educators what they think about AI, we invited them to use it freely, authentically, and over time.

Nearly 300 educators across roles, grade levels, and subject areas participated in the Britannica Studio beta test. Most were not existing Britannica users. All were encouraged to use Studio in real instructional contexts, not demos or simulations.

What we learned surprised us, not because teachers rejected AI, but because they were very clear about what it must do to be useful.

Trust isn’t a promise. It’s an outcome.

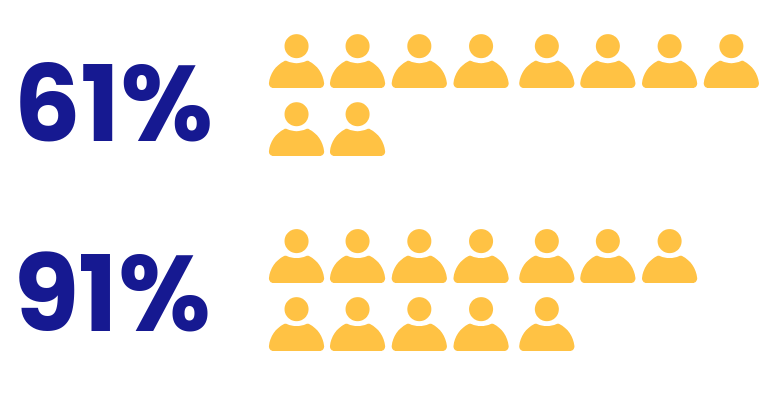

One of the clearest findings from the beta test was how trust evolved.

Early on, only 61% of educators felt very confident in the accuracy of Studio’s outputs. But after continued use, that number rose to 91%, with no respondents reporting low confidence by the end of the study.

🔗 The Trust Blueprint White Paper

Why the shift?

Educators pointed to two things:

- Transparent sourcing they could see and verify

- Consistent accuracy that reduced the need to rework content

Trust wasn’t abstract. Teachers described it practically: fewer corrections, less mental load, more confidence using materials directly with students.

Differentiation is still the hardest part of the job.

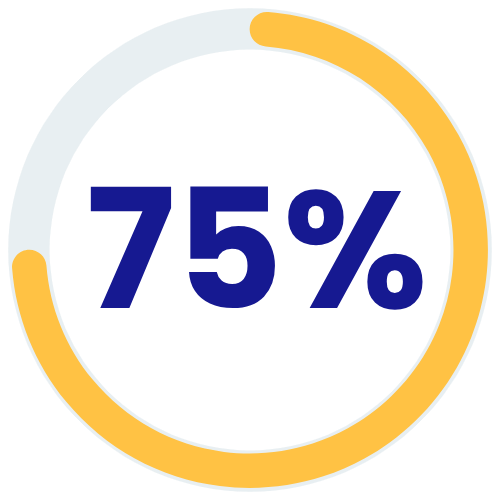

Across surveys and interviews, one challenge came up again and again: differentiation.

Supporting multilingual learners, emerging readers, and advanced students usually means creating multiple versions of the same material—work that is essential but rarely sustainable.

Nearly 75% of beta test participants cited differentiation and grade adaptation as a core value driver. What mattered most wasn’t simplification, but preserving instructional rigor across levels.

🔗 The Trust Blueprint White Paper

This reinforced a key insight: AI that can’t differentiate accurately at scale isn’t solving real classroom problems.

Workflow matters more than features.

Another unexpected takeaway wasn’t about any single tool; it was about a coherent workflow.

Educators consistently described Studio as simple, efficient, and clear because it mirrors how they already plan: creating content, adapting it, building assessments, and refining materials in one place.

AI adoption, it turns out, isn’t just about what tools can do. It’s about whether they fit into how teaching actually happens.

Responsible AI is a teaching issue, not just a policy one.

Perhaps most importantly, educators were clear that AI should support, not replace, professional judgment.

They valued being able to edit, adapt, and make decisions at every step. Many also saw transparent sourcing as a way to model AI literacy for students, helping them understand how information is accessed and evaluated.

Responsible AI, in other words, isn’t only about safety and compliance. It’s about pedagogy.

These insights and many more are explored in detail in The Trust Blueprint, our new white paper based on the full Britannica Studio beta study.

The Trust Blueprint white paper dives deeper into:

- How trust in AI changes over time

- Why differentiation and workflow drive real adoption

- What responsible AI design looks like in practice

- What schools and districts can take away as they navigate AI decisions

Whether you’re shaping policy, supporting teachers, or evaluating AI tools, we hope these details about Britannica Studio add clarity to a conversation that often feels louder than it is helpful.

The findings shared here are drawn from a global beta study of Britannica Studio, an AI workspace created specifically for instructional use. Studio pairs Britannica’s verified content with tools that support differentiation, workflow coherence, and teacher oversight. It’s designed not as a shortcut, but as an instructional partner, one that respects the complexity, judgment, and expertise that shape the art of teaching.

About the Author

Joan Jacobsen

Chief Product Officer

Joan brings more than 25 years of experience developing award-winning products for the classroom. She began her career as a classroom teacher before focusing on educational technology and product development. She has been instrumental in developing many high-quality, engaging solutions that support teachers and drive student success, including CODE and EdTech Breakthrough Award winners.
